The Artificial Intelligence of Advanced AMRs

Q.AI is the artificial intelligence (AI) software built into every Quasi autonomous mobile robot (AMR). It’s the brain behind our AMRs, enabling advanced self-navigation, unparalleled ease of use, and extensive insight into operational data.

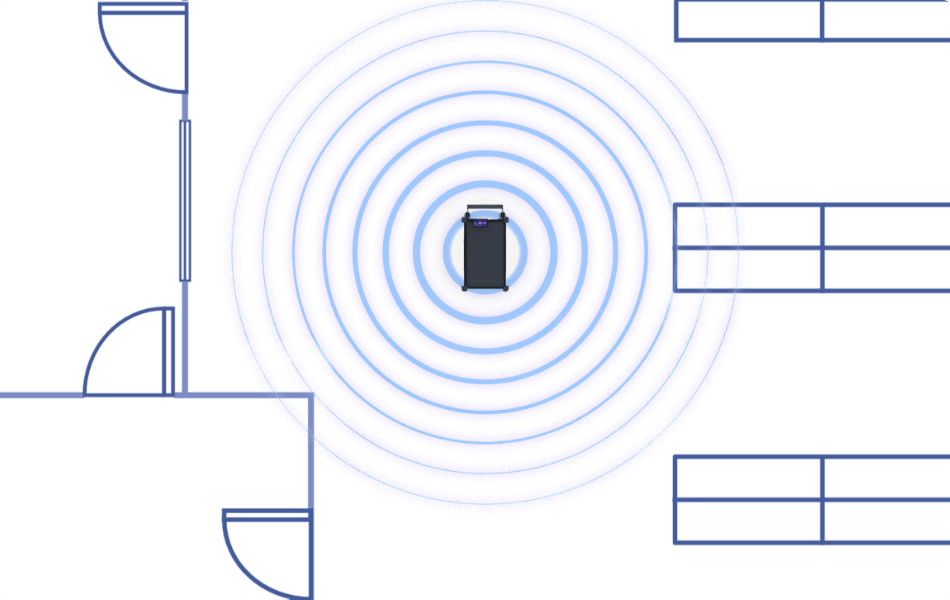

360° LiDAR – Long-Range

Real-Time Area Mapping

Our AMRs’ LiDAR system continuously scans the surroundings in 360° and feeds the data into Q.AI’s live, appearance-based mapping system, which creates and continuously updates a dynamic reference map. This map is crucial for precise localization, object detection, and reliable navigation.

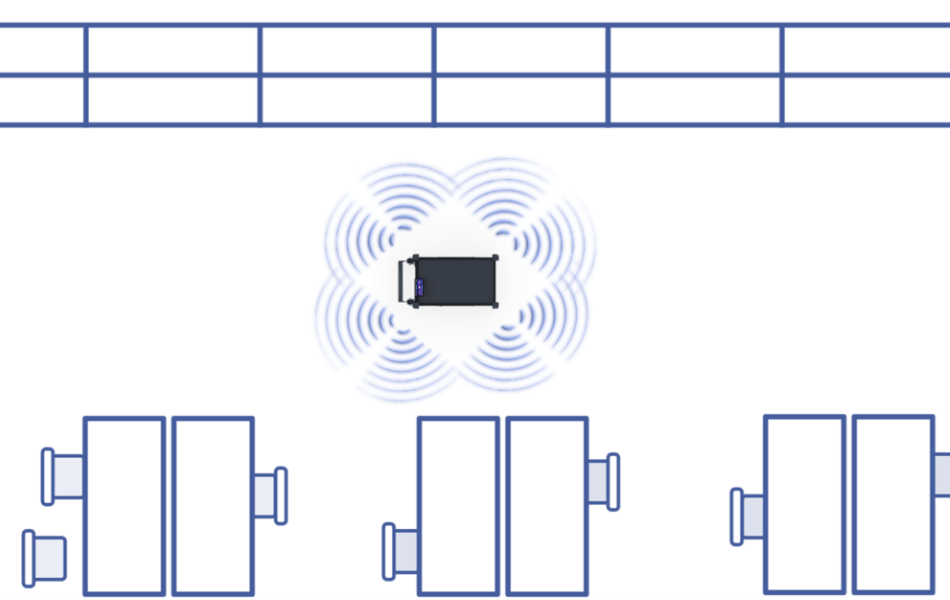

16 ToF Sensors – Short-Range

Split-Second Obstacle Avoidance

The Q.AI navigation stack integrates perimeter Time-of-Flight (ToF) sensors that detect objects close to Quasi AMRs. This enables instant reaction to and avoidance of obstacles, accurate maneuvers in tight spaces, and safe interaction with human coworkers.

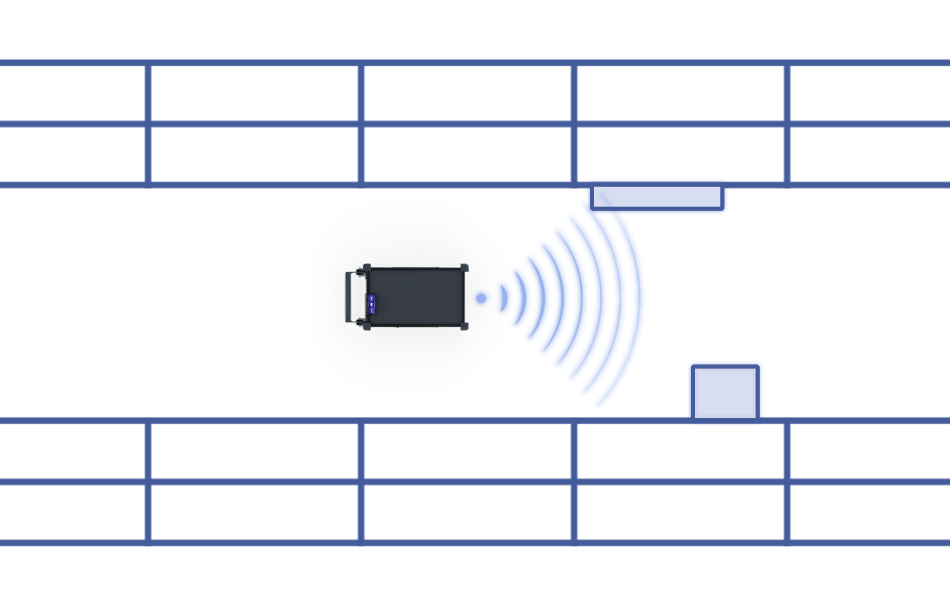

3D Depth Camera – Localization

Reliable Location & Orientation

3D stereo cameras provide Q.AI with detailed depth information, allowing Quasi AMRs to accurately identify their position relative to features in their surroundings, even after a system restart. This ensures uninterrupted operation with no lost map data and no re-localization, as well as exact positioning at each stop.

Q.AI Intelligent Collaboration

Learning & Adaptation

Embedded machine learning and instant knowledge sharing let your entire Quasi fleet integrate, scale, and grow more reliable with each delivery.

ROS 2 Robotic System Base

Total System & Hardware Integration

We’ve selected ROS 2 as the basis of our autonomous mobile robot software. This industry-standard platform allows seamless integration with a wide range of software and hardware, ensuring that Quasi AMRs adapt to existing infrastructure and workflows.

Leveraging ROS 2, Q.AI achieves real-time data exchange, precision robotic control, and effortless connection to warehouse and hospital management systems, ERPs, and other critical platforms.

Fleet-Wide Intelligence

Collaborative Fleet Learning

Every completed delivery, avoided obstacle, and route taken adds to your robot’s Q.AI knowledge base. Q.AI uses past experience to refine future navigation strategies, and what each robot learns is automatically shared across the entire fleet for fast, global adaptation.

Expand Your Fleet Instantly

Scalability with Zero Downtime

AMRs powered by Q.AI scale easily alongside your growing operations. Instant knowledge transfer syncs facility maps and learned behaviors to new robots in the fleet within seconds, with no need to remap areas, retrain systems, or add personnel, so new units start with zero downtime and immediate productivity.

Intelligent Reporting

Intelligent reporting is another facet of Q.AI’s data-processing capabilities. In addition to the ability to process enormous streams of data from various inputs, we’ve added a generalization and learning block.

Q.AI monitors patterns in these data streams and learns to extract the information relevant to reporting, auditing, and dashboard visualizations.